Packet Tracer: packet switching simulation

Packet Tracer is a fun, take-home, flexible software program that allows you to experiment with network behavior, build network models, and ask ‘what if’ questions. In this activity, you will explore how Packet Tracer serves as a modeling tool for network representations. Along the way, you will also observe a simulation of how packets are created and sent across the network from a source device to a destination device.
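Packet Tracer shows this process graphically. The Python below is a rough sketch of the idea being simulated, not how Packet Tracer itself works. It splits a message into numbered packets and lets them arrive in any order, which mimics packets taking independent paths. The destination then reassembles them by sequence number. The `Packet` fields and the `mtu` payload limit are illustrative assumptions, not Packet Tracer settings.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str        # source device name
    dst: str        # destination device name
    seq: int        # position of this chunk within the original message
    total: int      # how many packets the message was split into
    payload: bytes


def packetize(message: bytes, src: str, dst: str, mtu: int) -> list[Packet]:
    """Split a message into packets carrying at most `mtu` payload bytes each."""
    chunks = [message[i:i + mtu] for i in range(0, len(message), mtu)] or [b""]
    return [Packet(src, dst, seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def deliver(packets: list[Packet], rng: random.Random) -> list[Packet]:
    """Crude model of packet switching: each packet travels independently,
    so the destination may receive them in any order."""
    arrived = list(packets)
    rng.shuffle(arrived)
    return arrived


def reassemble(packets: list[Packet]) -> bytes:
    """Put packets back in sequence order and rebuild the original message."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or [p.seq for p in ordered] != list(range(ordered[0].total)):
        raise ValueError("missing or duplicate packets")
    return b"".join(p.payload for p in ordered)


if __name__ == "__main__":
    message = b"Hello from PC0 to PC1 across the network"
    sent = packetize(message, "PC0", "PC1", mtu=8)
    received = deliver(sent, random.Random(42))
    print("arrival order:", [p.seq for p in received])
    print("reassembled:", reassemble(received).decode())
```

A real network adds headers, routing decisions and retransmission on top of this. The sequence number is what lets the receiver undo the reordering.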

August 19, 2017

Design IoT Projects with Packet Tracer 7.1

January 6, 2010

Exploring Windows 7

Window Management.

By now, you’ve probably seen that Windows 7 does a lot to make window management easier: you can “dock” a window to the left or right half of the screen by simply dragging it to the edge; similarly, you can drag the window to the top of the screen to maximize it, and double-click the window top / bottom border to maximize it vertically with the same horizontal width. What you might not know is that all these actions are also available with keyboard shortcuts:

- Win+Left Arrow and Win+Right Arrow dock the window;

- Win+Up Arrow and Win+Down Arrow maximize and restore/minimize it;

- Win+Shift+Up Arrow and Win+Shift+Down Arrow maximize and restore its vertical size.

This side-by-side docking feature is invaluable on widescreen monitors. It makes the old Windows way of shift-clicking two items in the taskbar and then using the context menu to arrange them feel really painful.

Display Projection.

Had enough of messing around with weird and wonderful OEM display driver utilities to get your notebook display onto an external projector? Then you’ll be pleased to know that projection is really quick and simple with Windows 7. Just hit Win+P, and a pop-up window appears offering the available display options.

Use the arrow keys (or keep hitting Win+P) to switch between the “clone”, “extend” and “external only” display settings. You can also launch the same tool directly as displayswitch.exe.

If you want broader control over presentation settings, you can also press Win+X to open the Windows Mobility Center, which allows you to turn on a presentation “mode” that switches IM clients to do not disturb, disables screensavers, sets a neutral wallpaper etc. (Note that this feature is also available in Windows Vista.)

Cut Out The Clutter.

Working on a document in a window and want to get rid of all the extraneous background noise? Simply hit Win+Home to minimize all the non-active background windows, keeping the window you’re using in its current position. When you’re ready, simply press Win+Home again to restore the background windows to their original locations.

Multi-Monitor Windows Management.

The earlier tip on window management showed how you can dock windows within a monitor. One refinement of those shortcuts is that you can use Win+Shift+Left Arrow and Win+Shift+Right Arrow to move windows from one monitor to another, keeping them in the same relative location to the monitor’s top-left origin.

Command Junkies Only.

One of the most popular PowerToys in Windows XP was “Open Command Prompt Here”, which let you use the graphical shell to browse around the file system and then use the context menu to open a command prompt at the current directory. In Windows 7 (and in Windows Vista, incidentally, although not many folk knew about it), you get exactly the same effect by holding down the Shift key while you right-click a folder: the context menu gains an “Open command window here” entry. If the folder is a network location, a drive letter is mapped for you automatically.

It’s a Global Village. If you’ve tried to change your desktop wallpaper, you’ve probably noticed that there’s a set of wallpapers there that match the locale you selected when you installed Windows. (If you picked US, you’ll see beautiful views of Crater Lake in Oregon, the Arches National Park, a beach in Hawai’i, etc.) In fact, there are several sets of themed wallpapers installed based on the language you choose, but the others are in a hidden directory. If you’re feeling in an international mood, simply browse to C:\Windows\Globalization\MCT and you’ll see a series of pictures under the Wallpaper directory for each country. Just double-click on the theme file in the Theme directory to display a rotation through all the pictures for that country. (Note that some countries contain a generic set of placeholder art for now.)

The Black Box Recorder.

Every developer wishes there were a way for end users to quickly and simply record a repro of a problem that is unique to their machine. Windows 7 comes to the rescue! Part of the built-in diagnostic tools that we use internally to send feedback on the product, the Problem Steps Recorder is a simple screen capture tool that enables you to record a series of actions. Once you hit “record”, it tracks your mouse and keyboard and captures screenshots with any comments you choose to associate alongside them. When you stop recording, it saves the whole thing to a ZIP file containing an HTML-based “slide show” of the steps. It’s a really neat little tool and I can’t wait for it to become ubiquitous on every desktop! The program is called psr.exe; you can also search for it from Control Panel under “Record steps to reproduce a problem”.

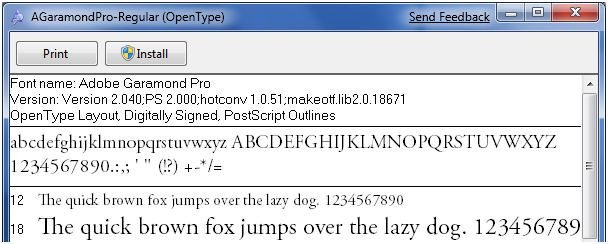

The Font of All Knowledge. Font installation is now really easy: the font viewer applet has an “Install” button that takes care of the installation process:

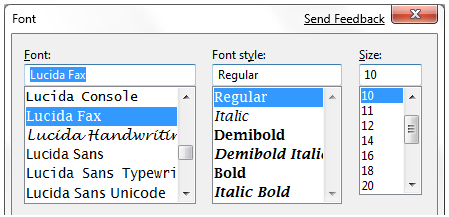

There are lots of other new features built into Windows 7 that will satisfy those of a typographic bent, incidentally – grouping multiple weights together, the ability to hide fonts based on regional settings, a new text rendering engine built into the DirectWrite API, and support in the Font common file dialog for more than the four “standard” weights. For example:

Gabriola.

As well as the other typographic features mentioned above, Windows 7 includes Gabriola, an elaborate display typeface from the Tiro Typeworks foundry that takes advantage of OpenType Layout to provide a variety of stylistic sets, flourishes and ornamentation ligatures.

Who Stole My Browser? If you feel like Internet Explorer is taking a long time to load your page, it’s worth taking a look at the add-ons you have installed. One of the more helpful little additions in Internet Explorer 8 is instrumentation for add-on initialization, allowing you to quickly see whether you’re sitting around waiting for plug-ins to load. Just click Tools / Manage Add-ons, and then scroll right in the list view to see the load time. On my machine, I noticed that the Research add-on that Office 2007 installs was a particular culprit, and since I never use it, it was simple to disable it from the same dialog box.

Rearranging the Furniture.

Unless you’ve seen it demonstrated, you may not know that the icons in the new taskbar aren’t fixed in-place. You can reorder them to suit your needs, whether they’re pinned shortcuts or running applications. What’s particularly nice is that once they’re reordered, you can start a new instance of any of the first five icons by pressing Win+1, Win+2, Win+3 etc. I love that I can quickly fire up a Notepad2 instance on my machine with a simple Win+5 keystroke, for instance.

What’s less well-known is that you can similarly drag the system tray icons around to rearrange their order, or move them in and out of the hidden icon list. It’s an easy way to customize your system to show the things you want, where you want them.

Installing from a USB Memory Stick.

I wanted to install Windows 7 Beta on a netbook to replace its pre-installed Windows XP environment. Like most netbook-class devices, this machine has no built-in media drive, nor did I have an external USB DVD drive available to boot from. The solution: I took a spare 4GB USB 2.0 thumbdrive, reformatted it as FAT32, and simply copied the contents of the Windows 7 Beta ISO image to the memory stick using xcopy e:\ f:\ /e /f (where e: was the DVD drive and f: was the removable drive location). Not only was it easy to boot and install from the thumbdrive, it was also blindingly fast: quicker than the corresponding DVD install on my desktop machine.
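If you would rather script the copy step, here is a rough Python equivalent of that xcopy command. It is offered as an illustrative sketch: `copy_tree_verbose` is a hypothetical helper. Like xcopy, it only copies files, so it does not format the stick as FAT32 or do anything else to make it bootable.

```python
import shutil
from pathlib import Path


def copy_tree_verbose(src: str, dst: str) -> list[str]:
    """Copy all files and subdirectories (including empty ones) from src into dst,
    printing each source -> destination pair as it goes. This loosely mirrors
    `xcopy src dst /e /f`; it does not format the target or make it bootable."""
    copied: list[str] = []

    def copy_and_report(s: str, d: str) -> str:
        print(f"{s} -> {d}")  # like xcopy /f: show full source and destination names
        copied.append(d)
        return shutil.copy2(s, d)

    # dirs_exist_ok (Python 3.8+) allows copying into an existing directory such as a drive root
    shutil.copytree(src, dst, copy_function=copy_and_report, dirs_exist_ok=True)
    return copied
```

With the drive letters from the article, the call would be `copy_tree_verbose("E:\\", "F:\\")`. Check the letters on your own machine first, because the destination's existing files will be overwritten.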

It’s also worth noting in passing that Windows 7 is far better suited to a netbook than any previous operating system: it has a much lighter hard drive and memory footprint than Windows Vista, while also being able to optimize for solid state drives (for example, it switches off disk defragmentation since random read access is as fast as sequential read access, and it handles file deletions differently to minimize wear on the solid state drive).

I Want My Quick Launch Toolbar Back!

You might have noticed that the old faithful Quick Launch toolbar is not only disabled by default in Windows 7, it’s actually missing from the list of toolbars. As is probably obvious, the concept of having a set of pinned shortcut icons is now integrated directly into the new taskbar. Based on early user interface testing, we think that the vast majority of users out there (i.e. not the kind of folk who read this blog, with the exception of my mother) will be quite happy with the new model, but if you’re after the retro behavior, you’ll be pleased to know that the old shortcuts are all still there. To re-enable it, do the following:

1. Right-click the taskbar and choose Toolbars / New Toolbar.

2. In the folder selection dialog, enter the following string and hit OK:

%userprofile%\AppData\Roaming\Microsoft\Internet Explorer\Quick Launch

3. Turn off the “lock the taskbar” setting, and right-click on the divider. Make sure that “Show text” and “Show title” are disabled and the view is set to “small icons”.

4. Use the dividers to rearrange the toolbar ordering to your liking, and then lock the taskbar again.

If it’s not obvious from the semi-tortuous steps above, this isn’t something we’re exactly desperate for folks to re-enable, but it’s there if you really need it for some reason. Incidentally, we’d love you to really try the new model first and give us feedback on why you felt the new taskbar didn’t suit your needs.

It’s a Drag.

Much play has been made of the Jump Lists feature in Windows 7, allowing applications like Windows Live Messenger to offer an easy task-based entry point. Jump lists replace the default right-click context menu in the new taskbar; another way to access them (particularly useful if you’re running Windows 7 on a one-button MacBook) is by left-clicking and dragging up in a kind of “swooshing” motion. This was designed for touch-enabled devices like the beautiful HP TouchSmart all-in-one PC, where the same gesture applies.

Another place where you can “swoosh” (not an official Microsoft term) is the IE 8 address bar, where the downward drag gesture brings up an expanded list containing the browser history, favorites and similar entries. The slower you drag, the cooler the animation!

Standards Support. Every review of Windows 7 that I’ve seen has noted the revamped WordPad and Paint applets, which add an Office-like ribbon to expose their functionality. Few, however, have noticed one small but hopefully appreciated feature: WordPad can now read and write both the Word 2007-compatible Office Open XML file format and the OpenDocument format that IBM and Sun have been advocating.

Windows Vista-Style Taskbar. I wasn’t initially a fan of the Windows 7 taskbar when it was first introduced in early Windows 7 builds, but as the design was refined in the run up to the beta, I was converted and now actively prefer the new look, particularly when I’ve got lots of windows open simultaneously. For those who really would prefer a look more reminiscent of Windows Vista, the good news is that it’s easy to customize the taskbar to more closely mirror the old version.

To achieve this look, right-click on the taskbar and choose the properties dialog. Select the “small icons” checkbox and under the “taskbar buttons” setting, choose “combine when taskbar is full”. It’s not pixel-perfect in accuracy, but it’s close from a functionality point of view.

Peeking at the Desktop. While we’re on the taskbar, it’s worth noting a few subtleties. You’ve probably seen the small rectangle in the bottom right hand corner: this is the feature we call “Aero Peek”, which enables you to see any gadgets or icons you’ve got on your desktop. I wanted to note that there’s a keyboard shortcut that does the same thing – just press Win+Space.

Running with Elevated Rights. Want to quickly launch a taskbar-docked application as an administrator? It’s easy – hold down Ctrl+Shift while you click on the icon, and you’ll immediately launch it with full administrative rights (assuming your account has the necessary permissions, of course!)

One More of the Same, Please.

I’ve seen a few folk caught out by this one. If you’ve already got an application open on your desktop (for example, a command prompt window), and you want to open a second instance of the same application, you don’t have to go back to the start menu. You can simply hold down the Shift key while clicking on the taskbar icon, and it will open a new instance of the application rather than switching to the existing application. For a keyboard-free shortcut, you can middle-click with the third mouse button to do the same thing. (This trick assumes that your application supports multiple running instances, naturally.)

Specialized Windows Switching.

Another feature that power users will love is the ability to do a kind of “Alt+Tab” switching across windows that belong to just one application. For example, if you’ve got five Outlook message windows open along with ten other windows, you can quickly tab through just the Outlook windows by holding down the Ctrl key while you repeatedly click on the single Outlook icon. This will toggle through each of the five Outlook windows in order, and is way faster than opening Alt+Tab and trying to figure out which of the tiny thumbnail images relates to the specific message you’re trying to find.

Walking Through the Taskbar.

Another “secret” Windows shortcut: press Win+T to move the focus to the taskbar. Once you’re there, you can use the arrow keys to select a particular window or group and then hit Enter to launch or activate it. As ever, you can cancel out of this mode by hitting the Esc key. I don’t know for sure, but I presume this shortcut was introduced for those with accessibility needs. However, it’s equally valuable to power users – another good reason for all developers to care about ensuring their code is accessible.

Pin Your Favorite Folders.

If you’re always working in the same four or five folders, you can quickly pin them to the Explorer icon on the taskbar. Hold down the right mouse button and drag the folder to the taskbar, and it will automatically be pinned in the Explorer Jump List.

Starting Explorer from “My Computer”. If you spend more time manipulating files outside of the documents folders than inside, you might want to change the default starting directory for Windows Explorer so that it opens at the Computer node.

To do this, navigate to Windows Explorer in the Start Menu (it’s in the Accessories folder). Then edit the properties and change the target to read:

%SystemRoot%\explorer.exe /root,::{20D04FE0-3AEA-1069-A2D8-08002B30309D}

If you want the change to affect the icon on the taskbar, you’ll need to unpin and repin it to the taskbar so that the new shortcut takes effect. It’s worth noting that Win+E will continue to display the documents library as the default view: I’ve not found a way to change this from the shell at this time.

ClearType Text Tuning and Display Color Calibration. If you want to tune up your display for image or text display, we have the tools included out of the box. It’s amazing what a difference this makes: by slightly darkening the color of the text and adjusting the gamma back a little, my laptop display looks much crisper than it did before. You’d adjust the brightness and contrast settings on that fancy 42″ HDTV you’ve just bought: why wouldn’t you do the same for the computer displays that you stare at every day?

Check out cttune.exe and dccw.exe respectively, or run the applets from Control Panel.

ISO Burning. Easy to miss if you’re not looking for it: you can double-click on any DVD or CD .ISO image and you’ll see a helpful little applet that will enable you to burn the image to a blank disc. No more grappling for shareware utilities of questionable parentage!

Windows Movie Maker.

Windows 7 doesn’t include a movie editing tool – it’s been moved to the Windows Live Essentials package, along with Photo Gallery, Mail and Messenger. Unfortunately, Windows Live Movie Maker is currently still in an early beta that is missing most of the old feature set (we’re reworking the application), and so you might be feeling a little bereft of options. It goes without saying that we intend to have a better solution by the time we ship Windows 7, but in the meantime the best solution for us early adopters is to use Windows Movie Maker 2.6 (which is essentially the same as the most recent update to the Windows XP version). It’s missing the full set of effects and transitions from the Windows Vista version, and doesn’t support HD editing, but it’s pretty functional for the typical usage scenario of home movie editing.

Download Windows Movie Maker 2.6 from here:

http://microsoft.com/downloads/details.aspx?FamilyID=d6ba5972-328e-4df7-8f9d-068fc0f80cfc

Hiding the Windows Live Messenger Icon.

Hopefully your first act after Windows 7 setup completed was to download and install the Windows Live Essentials suite of applications (if not, then you’re missing out on a significant part of the Windows experience). If you’re a heavy user of IM, you may love the way that Windows Live Messenger is front and center on the taskbar, where you can easily change status and quickly send an IM to someone.

On the other hand, you may prefer to keep Windows Live Messenger in the system tray where it’s been for previous releases. If so, you can fool the application into the old style of behavior. To do this, close Windows Live Messenger, edit the shortcut properties and set the application to run in Windows Vista compatibility mode. Bingo!

Enjoy The Fish. I’m surprised that not many people seem to have caught the subtle joke with the Siamese fighting fish that is part of the default background, so I’ll do my part at keeping the secret hidden. Check out Wikipedia for a clue.

When All Else Fails…

There are always those times when you’re in a really bad spot – you can’t boot up properly, and what you really want is something you can quickly use to get at a command prompt so you can properly troubleshoot. Windows 7 now includes the ability to create a system repair disc, which is essentially a CD-bootable version of Windows that just includes the command prompt and a suite of system tools. Just type “system repair disc” in the Start Menu search box, and you’ll be led to the utility.

The following table lists a selection of the cmdlets that ship with PowerShell, alongside the most similar commands in other well-known command-line interpreters.

| Windows PowerShell (Cmdlet) | Windows PowerShell (Alias) | cmd.exe / COMMAND.COM | Bash | Description |
|---|---|---|---|---|
| Get-Location | gl, pwd | cd | pwd | Display the current directory/present working directory |
| Set-Location | sl, cd, chdir | cd, chdir | cd | Change the current directory |
| Clear-Host | cls, clear | cls | clear | Clear the screen |
| Copy-Item | cpi, copy, cp | copy | cp | Copy one or several files / a whole directory tree |
| Get-Help | help, man | help | man | Help on commands |
| Remove-Item | ri, del, erase, rmdir, rd, rm | del, erase, rmdir, rd | rm, rmdir | Delete a file / a directory |
| Rename-Item | rni, ren | ren, rename | mv | Rename a file / a directory |
| Move-Item | mi, move, mv | move | mv | Move a file / a directory to a new location |
| Get-ChildItem | gci, dir, ls | dir | ls | List all files / directories in the (current) directory |
| Write-Output | echo, write | echo | echo | Print strings, variables etc. to standard output |
| Pop-Location | popd | popd | popd | Change the current directory to the directory most recently pushed onto the stack |
| Push-Location | pushd | pushd | pushd | Push the current directory onto the stack |
| Set-Variable | sv, set | set | export | Set the value of a variable / create a variable |
| Get-Content | gc, type, cat | type | cat | Get the content of a file |
| Select-String | n/a | find, findstr | grep | Print lines matching a pattern |
| Get-Process | gps, ps | tlist, tasklist | ps | List all currently running processes |
| Stop-Process | spps, kill | kill, taskkill | kill | Stop a running process |
| Tee-Object | tee | n/a | tee | Pipe input to a file or variable, then pass the input along the pipeline |

Examples

Examples are provided first using the long-form canonical syntax and then using more terse UNIX-like and DOS-like aliases that are set up in the default configuration. Examples that could harm a system include the -whatif parameter to prevent them from actually executing.

- Stop all processes that begin with the letter “p”:

PS> get-process p* | stop-process -whatif

PS> ps p* | kill -whatif

- Find the processes that use more than 1000 MB of memory and kill them:

PS> get-process | where-object { $_.WS -gt 1000MB } | stop-process -whatif

PS> ps | ? { $_.WS -gt 1000MB } | kill -whatif

- Calculate the number of bytes in the files in a directory:

PS> get-childitem | measure-object -property length -sum

PS> ls | measure-object -p length -s

PS> dir | measure-object -p length -s

- Determine whether a specific process is no longer running:

PS> $processToWatch = get-process notepad

PS> $processToWatch.WaitForExit()

PS> $p = ps notepad

PS> $p.WaitForExit()

- Change the case of a string from lower to upper:

PS> "hello, world!".ToUpper()

- Insert the string “ABC” after the first character in the word “string” to produce the result “sABCtring”:

PS> "string".Insert(1, "ABC")

- Download a specific RSS feed and show the titles of the 8 most recent entries:

PS> $rssUrl = "http://blogs.msdn.com/powershell/rss.aspx"

PS> $blog = [xml](new-object System.Net.WebClient).DownloadString($rssUrl)

PS> $blog.rss.channel.item | select title -first 8

- Set $UserProfile to the value of the UserProfile environment variable:

PS> $UserProfile = $env:UserProfile

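- Call a static method of a .NET class (the System.Windows.Forms assembly has to be loaded first; Add-Type requires Version 2):

PS> Add-Type -AssemblyName System.Windows.Forms

PS> [System.Windows.Forms.MessageBox]::Show("Hello, World!")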
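For comparison, a few of these one-liners translate to plain Python as shown below. This is an illustrative sketch, not part of PowerShell, and the helper names are hypothetical. `total_bytes` counts only the regular files directly inside the directory. `rss_titles` works on feed text you have already downloaded, for example with `urllib.request.urlopen(url).read().decode()`. It returns the first entries in document order, which are the most recent ones only if the feed lists newest first.

```python
import os
import xml.etree.ElementTree as ET


def total_bytes(path: str = ".") -> int:
    """Sum the sizes of the regular files directly inside `path`,
    roughly what `get-childitem | measure-object -property length -sum` reports as Sum."""
    with os.scandir(path) as entries:
        return sum(entry.stat().st_size for entry in entries if entry.is_file())


def insert_at(text: str, index: int, value: str) -> str:
    """Equivalent of "string".Insert(1, "ABC"): Python strings are immutable,
    so slice and build a new string."""
    return text[:index] + value + text[index:]


def rss_titles(rss_xml: str, count: int = 8) -> list[str]:
    """Return the titles of the first `count` <item> elements of an RSS 2.0 feed,
    like `$blog.rss.channel.item | select title -first 8`."""
    root = ET.fromstring(rss_xml)  # root is the <rss> element
    titles = [item.findtext("title", default="") for item in root.iterfind("channel/item")]
    return titles[:count]
```

For example, `insert_at("string", 1, "ABC")` returns `"sABCtring"`, and Python's built-in `"hello, world!".upper()` plays the role of `.ToUpper()`.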

File extensions

- PS1 – Windows PowerShell shell script

- PS1XML – Windows PowerShell format and type definitions

- PSC1 – Windows PowerShell console file

- PSD1 – Windows PowerShell data file (for Version 2)

- PSM1 – Windows PowerShell module file (for Version 2)

A few more tips and tricks.

- Windows key + Left: docks current window to the left side of the screen.

- Windows key + Right: docks current window to the right side of the screen.

- Windows key + Up: maximizes and/or restores foreground window.

- Windows key + Down: minimizes active window.

- If you want a more Vista-esque taskbar rather than the superbar (why anyone would revert is beyond me), right-click the Taskbar, go to Properties, check the ‘Use small icons’ option, then change the “Taskbar Buttons” option to ‘Never combine.’

- Windows 7 can now burn ISO images itself, instead of making users grapple with third-party applications.

- For those lucky people with a multi-monitor setup, Windows + SHIFT + Left (or Right) will shift a window from monitor to monitor.

- Gone is the “Add Font” dialog. It’s been replaced with a much nicer system: download a font and double-click it, and you’ll be greeted with the familiar font window, which now has an ‘Install’ button.

- Windows 7 now includes Gabriola. This is an elaborate display typeface that takes advantage of OpenType layout to create a variety of stylistic sets.

- If you press Windows + 1, it will create a new instance of the first icon in the task bar. This is handy if you do a lot of coding and need to open several instances of a program.

- If you right-click on a Taskbar icon, it brings up the much talked about Jump List. However, the same can be done by clicking with the left mouse button and dragging the icon “out” (so to speak). This was specifically designed for touch-enabled computers, such as your lovely HP TouchSmart PC.

- To run a program as an Administrator, it’s now as easy as holding CTRL + SHIFT when you click its taskbar icon.

- With Windows 7, you can now create a ‘System Repair Disc.’ This is a CD bootable version of Windows 7 that includes the command prompt and a suite of system tools. Very handy for those really tough spots (which, with this still in beta, could be just around the corner). To get to this, simply open the Start Menu and type: “system repair disc” in the search field.

November 7, 2009

Windows 7 Launch Party

We held a “Windows 7 Launch Party” at my place in Kolkata on 29th October 2009. My colleagues from ISB&M and my friends gathered there to celebrate the Windows 7 launch.

April 27, 2009

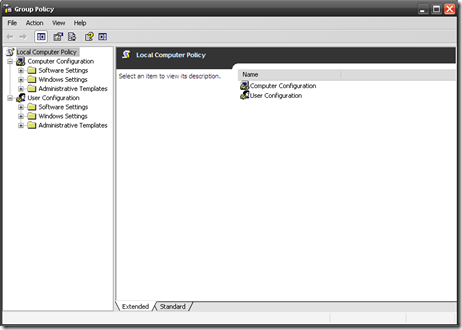

Using Group Policy in Windows (type gpedit.msc in the Run dialog)

Windows operating systems provide a centralized management and configuration solution called Group Policy. Group Policy is supported on Windows 2000, Windows XP Professional, Windows Vista, Windows Server 2003 and Windows Server 2008. Windows XP Media Center Edition and Windows XP Professional computers that are not joined to a domain can also use the Group Policy Object Editor to change the group policy for the individual computer. This local group policy, however, is much more limited than GPOs for Active Directory. Home editions of Windows do not support Group Policy, since they have no functionality to connect to a domain.

Group Policy is usually used in enterprise environments, but it can be used in schools, small businesses, and other organizations as well. Group Policy can control a system’s registry, NTFS security, audit and security policy, software installation, logon/logoff scripts, folder redirection, and Internet Explorer settings. For example, you can use it to restrict actions that pose a security risk, such as blocking the Task Manager, restricting access to certain folders, or disabling downloaded executable files.

Group Policy can be applied either through Active Directory or as Local Computer Policy. Local Group Policy (LGP), edited with GPEDIT, is a more basic version of the group policy used by Active Directory. In versions of Windows before Vista, LGP can configure the group policy for a single local computer but, unlike Active Directory group policy, cannot make policies for individual users or groups. Windows Vista supports Multiple Local Group Policy Objects (MLGPO), which allow setting local group policy for individual users. Windows Vista provides this ability with three layers of Local Group Policy objects: Local Group Policy, Administrators and Non-Administrators Group Policy, and user-specific Local Group Policy. These layers are processed in order, starting with Local Group Policy, continuing with Administrators and Non-Administrators Group Policy, and finishing with user-specific Local Group Policy.
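The layering can be pictured as a merge in processing order. The sketch below is a simplified model, not the Windows implementation. Each layer is a hypothetical dictionary holding only the settings it actually configures. Layers are processed from Local Group Policy through to user-specific policy, so a setting configured in a later layer overrides the same setting from an earlier one.

```python
from collections.abc import Mapping


def effective_policy(*layers: Mapping[str, object]) -> dict[str, object]:
    """Merge Local Group Policy layers in processing order.

    Each layer holds only the settings it actually configures ("Not configured"
    entries are simply absent), so a later layer overrides an earlier one only
    for the settings it sets itself.
    """
    result: dict[str, object] = {}
    for layer in layers:          # e.g. local, administrators/non-administrators, user-specific
        result.update(layer)
    return result


# Hypothetical setting names, for illustration only
local_policy = {"RemoveRunMenu": False, "DisableAutoPlay": True}
non_admins = {"RemoveRunMenu": True}
user_specific = {"DisableAutoPlay": False}

print(effective_policy(local_policy, non_admins, user_specific))
# {'RemoveRunMenu': True, 'DisableAutoPlay': False}
```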

Group Policy is primarily used in Active Directory solutions. Policy settings are stored in what are called Group Policy Objects (GPOs). Each GPO is internally referenced by a Globally Unique Identifier (GUID) and may be linked to multiple domains or organizational units. In this way, potentially thousands of machines or users can be updated via a simple change to a single GPO, which can reduce the administrative burden and costs associated with managing these resources.

Group Policies are analyzed and applied at startup for computers and at logon for users. The client machine also refreshes most Group Policy settings periodically in the background. By default this happens every 90 minutes plus a random offset of up to 30 minutes, and both values can themselves be configured through Group Policy settings.
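Assuming the Windows defaults of a 90-minute background refresh interval plus a random offset of up to 30 minutes, the scheduling can be sketched as follows. `next_background_refresh` is a hypothetical helper used only for illustration. The random offset spreads the refreshes out so that many clients do not all refresh at the same moment.

```python
import random
from datetime import datetime, timedelta
from typing import Optional


def next_background_refresh(last: datetime,
                            interval_minutes: float = 90,
                            max_offset_minutes: float = 30,
                            rng: Optional[random.Random] = None) -> datetime:
    """Return when the next background refresh would run: a fixed interval plus a
    random offset, so that many clients do not all refresh at the same moment."""
    if rng is None:
        rng = random.Random()
    offset = rng.uniform(0, max_offset_minutes)
    return last + timedelta(minutes=interval_minutes + offset)


if __name__ == "__main__":
    start = datetime(2009, 4, 27, 9, 0)
    for _ in range(3):
        print(next_background_refresh(start))  # somewhere between 10:30 and 11:00
```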

- Configuring Group Policy Settings

- Using Local Policy to Turn Off Windows Features

Group Policy Object Editor (GPEDIT) is the main application that is used to administer Group Policies. GPEDIT consists of two main sections: User Configuration and Computer Configuration. The User Configuration holds settings that are applied to users (at logon and periodic background refresh) while the Computer Configuration holds settings that are applied to computers (at startup and periodic background refresh). These sections are further divided into the different types of policies that can be set, such as Administrative Templates, Security, or Folder Redirection.

Group Policy settings are configured by navigating to the appropriate location in each section. For example, you can set an Administrative Templates policy setting in a GPO to prevent users from seeing the Run command. To do this, you would enable the policy setting Remove Run Menu from Start Menu, which is located under User Configuration: Administrative Templates: Start Menu and Taskbar. You edit most policy settings by double-clicking the title of the policy setting, which opens a dialog box that provides specific options. In Administrative Templates policy settings, for example, you can choose to enable or disable the policy setting or leave it as not configured. In other areas, such as Security Settings, you can select a check box to define a policy setting and then set available parameters.

The Group Policy Object Editor (GPEDIT) provides different ways of learning about the function or definition of specific policy settings. In most cases, when you double-click the title of a policy setting, the dialog box contains any relevant defining information about the policy setting. For Administrative Templates policy settings, the Group Policy Object Editor provides explanation text directly in the Web view of the console. You can also find this explanation text by double-clicking the policy setting and then clicking the Explain text tab. In either case, this text shows operating system requirements, defines the policy setting, and includes any specific details about the effect of enabling or disabling the policy setting.

Windows has a lot of features, but you may not want all of them to be enabled for all users. For example, the "Auto Play" feature on CD-ROM drives might be one you would like to have turned off. Starting the policy editor is quite simple.

1. Click Start and then Run.

2. Type gpedit.msc and press enter.

3. The policy editor will start.

The top-left corner should read "Local Computer Policy". Take plenty of time to familiarize yourself with GPEDIT before you attempt any changes, and be careful when you are setting options. Read the help and understand each setting before you change it. Take the time to browse through both main sections, "Computer Configuration" and "User Configuration". Both sections contain the same subsections, some of which you do not need to touch. The one you will be most interested in for both User and Computer Configuration is "Administrative Templates".

There are usually three settings for each policy:

1. Not configured – This is the default setting. It means the policy does not override any configuration changes that the user has made on the machine. If you do not want to specify a certain setting, leave it at this option.

2. Enabled – This means that the particular setting or option is set. For example "Enabled" against "Auto Play is disabled" means that Auto Play is disabled.

3. Disabled – This is the opposite of enabled and usually means you have turned off access to a feature that would normally be accessible.

There will be exceptions to some settings, where you are asked to actually enter text or choose from a list. Sometimes after you enable a setting there will be additional options you need to select.

For Windows 2000, you can see an explanation of what each policy setting does by right-clicking the setting and choosing Properties; the "Explain" tab gives you the information. For Windows XP, select the "Extended" tab at the bottom of the Group Policy Object Editor window. The Explain tab in the Properties dialog box is also available, as in Windows 2000.


Policy Changes In Action

Many of the changes you make take effect as soon as your computer applies the setting and the desktop refreshes. Other changes might not take full effect until your system has been restarted, so you may want to reboot after every change. Whatever you change, make sure it is what you want; otherwise you could accidentally lock yourself out of something.

Policy Highlights –

Here are a couple of changes to the policy that you might want to consider making.

A) Set Internet Explorer Homepage. This stops your home page from being changed: it is reset each time you log in. It affects all users of your machine.

—- User Configuration: Windows Settings: Internet Explorer Maintenance: URLs: Home Page

B) Disable Auto Play. Turn off auto play of new CD-ROMs and music CDs:

—- User Configuration: Administrative Templates: System: Disable Auto Play

—- Computer Configuration: Administrative Templates: System: Disable Auto Play

C) Turn Off Personalised Menus. Does the start menu annoy you by not showing everything? Turn off personalised menus for all users by enabling this setting.

—- User Configuration: Administrative Templates: Windows Components: Start Menu and Task Bar: Disable Personalised Menus

April 21, 2009

Configuring Linux FTP Server

How To Download And Install VSFTPD:

Most RedHat and Fedora Linux software products are available in the RPM format. Downloading and installing RPMs isn’t hard. If you need a refresher, Chapter 6, on RPMs, covers how to do this in detail. It is best to use the latest version of VSFTPD.

When searching for the file, remember that the VSFTPD RPM’s filename usually starts with the word vsftpd followed by a version number, as in: vsftpd-1.2.1-5.i386.rpm.

How To Get VSFTPD Started:

You can start, stop, or restart VSFTPD after booting by using these commands:

[root@bigboy tmp]# service vsftpd start

[root@bigboy tmp]# service vsftpd stop

[root@bigboy tmp]# service vsftpd restart

To configure VSFTPD to start at boot you can use the chkconfig command.

[root@bigboy tmp]# chkconfig vsftpd on

Note: In RedHat Linux version 8.0 and earlier, VSFTPD operation is controlled by the xinetd process, which is covered in Chapter 16, "TELNET, TFTP, and XINETD." You can find a full description of how to configure these versions of Linux for VSFTPD in Appendix III, "Fedora Version Differences."

Testing the Status of VSFTPD:

You can always test whether the VSFTPD process is running by using the netstat -a command, which lists all active TCP and UDP sockets, including the ports on which the server is listening for traffic. This example shows the expected output:

[root@bigboy root]# netstat -a | grep ftp

tcp 0 0 *:ftp *:* LISTEN

[root@bigboy root]#

If VSFTPD wasn’t running, there would be no output at all.
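If you would rather script this check, here is a minimal Python sketch that does the same job as the grep. The function name ftp_is_listening is hypothetical, and the sketch assumes the six-column Linux netstat layout shown above (protocol, two queue counters, local address, foreign address, state).

```python
def ftp_is_listening(netstat_output: str) -> bool:
    """Return True if a TCP socket on the ftp port (21) is in the LISTEN state.

    Assumes Linux netstat columns: proto recv-q send-q local foreign state.
    """
    for line in netstat_output.splitlines():
        fields = line.split()
        if len(fields) < 6 or not fields[0].startswith("tcp"):
            continue  # skip headers, UDP sockets and malformed lines
        local_address, state = fields[3], fields[5]
        port = local_address.rsplit(":", 1)[-1]  # "*:ftp" -> "ftp", ":::21" -> "21"
        if state == "LISTEN" and port in ("ftp", "21"):
            return True
    return False


if __name__ == "__main__":
    sample = "tcp 0 0 *:ftp *:* LISTEN"
    print(ftp_is_listening(sample))
```

You could feed it netstat output captured with Python's subprocess module. With netstat -an the port is shown as 21 instead of ftp, which is why both forms are accepted.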

The vsftpd.conf File:

VSFTPD reads the contents of its vsftpd.conf configuration file only when it starts, so you'll have to restart VSFTPD each time you edit the file for the changes to take effect.

This file uses a number of default settings you need to know about.

> By default, VSFTPD runs as an anonymous FTP server. Unless you want any remote user to log in to your default FTP directory using the username anonymous and a password that's the same as their email address, I would suggest turning this off. You can set the configuration file's anonymous_enable directive to NO to disable this feature. At the same time, you'll need to let local users log in by removing the comment symbol (#) before the local_enable directive.

> VSFTPD allows only anonymous FTP downloads to remote users, not uploads from them. This can be changed by modifying the anon_upload_enable directive shown later.

> VSFTPD doesn’t allow anonymous users to create directories on your FTP server. You can change this by modifying the anon_mkdir_write_enable directive.

> VSFTPD logs FTP access to the /var/log/vsftpd.log log file. You can change this by modifying the xferlog_file directive.

> By default VSFTPD expects files for anonymous FTP to be placed in the /var/ftp directory. You can change this by modifying the anon_root directive. There is always the risk with anonymous FTP that users will discover a way to write files to your anonymous FTP directory. You run the risk of filling up your /var partition if you use the default setting. It is best to make the anonymous FTP directory reside in its own dedicated partition.

The configuration file is fairly straightforward, as you can see in the snippet below.

# Allow anonymous FTP?

anonymous_enable=YES

…

# Uncomment this to allow local users to log in.

local_enable=YES

…

# Uncomment this to enable any form of FTP write command.

# (Needed even if you want local users to be able to upload files)

write_enable=YES

…

# Uncomment to allow the anonymous FTP user to upload files. This only

# has an effect if global write enable is activated. Also, you will

# obviously need to create a directory writable by the FTP user.

#anon_upload_enable=YES

…

# Uncomment this if you want the anonymous FTP user to be able to create

# new directories.

#anon_mkdir_write_enable=YES

…

# Activate logging of uploads/downloads.

xferlog_enable=YES

…

# You may override where the log file goes if you like.

# The default is shown below.

#xferlog_file=/var/log/vsftpd.log

…

# The directory which vsftpd will try to change

# into after an anonymous login. (Default = /var/ftp)

#anon_root=/data/directory

To activate or deactivate a feature, remove or add the # at the beginning of the appropriate line.
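To make the effect of the # concrete, here is a rough Python sketch of how vsftpd treats this file: comment lines are skipped, each remaining line is an option=value pair, and any option that is absent keeps vsftpd's built-in default. The three defaults shown come from the vsftpd.conf man page; the function name effective_settings is hypothetical, and the real server understands many more options.

```python
# Built-in defaults for three options, as documented in the vsftpd.conf man page.
DEFAULTS = {"anonymous_enable": "YES", "local_enable": "NO", "write_enable": "NO"}


def effective_settings(conf_text: str) -> dict:
    """Return the option values vsftpd would end up using (simplified model)."""
    settings = dict(DEFAULTS)
    for line in conf_text.splitlines():
        if not line or line.startswith("#"):
            continue  # blank or commented-out line: ignored, so the default stays
        option, sep, value = line.partition("=")
        if sep:
            settings[option] = value  # vsftpd.conf allows no spaces around "="
    return settings


if __name__ == "__main__":
    conf = "# Allow anonymous FTP?\n#anonymous_enable=YES\nlocal_enable=YES\n"
    print(effective_settings(conf))
```

Note what the sample shows: commenting out anonymous_enable does not turn anonymous FTP off, because the built-in default is YES. To disable it, the line must read anonymous_enable=NO.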

Other vsftpd.conf Options

There are many other options you can add to this file:

o Limiting the maximum number of client connections (max_clients)

o Limiting the number of connections by source IP address (max_per_ip)

o The maximum rate of data transfer per anonymous login. (anon_max_rate)

o The maximum rate of data transfer per non-anonymous login. (local_max_rate)

Descriptions of these and many other options can be found in the vsftpd.conf man page.

FTP Security Issues:

FTP has a number of security drawbacks, but you can overcome them in some cases. You can restrict an individual Linux user’s access to non-anonymous FTP, and you can change the configuration to not display the FTP server’s software version information, but unfortunately, though very convenient, FTP logins and data transfers are not encrypted.

The /etc/vsftpd.ftpusers File

For added security, you can deny FTP access to specific users by adding them to the list of users in the /etc/vsftpd.ftpusers file. The VSFTPD package creates this file with a number of entries for privileged users that normally shouldn't have FTP access. Because FTP doesn't encrypt passwords, which increases the risk of data or passwords being compromised, it is a good idea to keep these entries and add new ones for any other users who should not use FTP.

Anonymous Upload

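If you want remote users to write data to your FTP server, create a write-only directory within /var/ftp/pub. This allows your users to upload files but not list or read files uploaded by other users. Creating a file in a directory requires both write and execute (search) permission on it, so other users get write and execute, but not read, permission. The write_enable and anon_upload_enable directives described earlier must also be enabled. The commands you need are:

[root@bigboy tmp]# mkdir /var/ftp/pub/upload

[root@bigboy tmp]# chmod 733 /var/ftp/pub/upload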

FTP Greeting Banner

Change the default greeting banner in the vsftpd.conf file to make it harder for malicious users to determine the type of system you have. The directive is:

ftpd_banner=New Banner Here

Using SCP As Secure Alternative To FTP

One of the disadvantages of FTP is that it does not encrypt your username and password. This could make your user account vulnerable to an attacker eavesdropping on the network connection. Secure Copy (SCP) and Secure FTP (SFTP) provide encryption and can be considered an alternative to FTP for trusted users. However, SCP does not support anonymous services, a feature that FTP does support.

Tutorial:

FTP has many uses, one of which is allowing numerous unknown users to download files. You have to be careful, because you run the risk of accidentally allowing unknown persons to upload files to your server. This sort of unintended activity can quickly fill up your hard drive with illegal software, images, and music for the world to download, which in turn can clog your server’s Internet access and drive up your bandwidth charges.

FTP Users with Only Read Access to a Shared Directory

In this example, anonymous FTP is not desired, but a group of trusted users needs read-only access to a directory for downloading files. Here are the steps:

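1. Disable anonymous FTP. Commenting out the anonymous_enable line in the vsftpd.conf file is not enough, because vsftpd then falls back to its built-in default of YES; set it to NO instead, like this:

# Allow anonymous FTP?

anonymous_enable=NO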

2. Enable individual logins by making sure you have the local_enable line uncommented in the vsftpd.conf file like this:

# Uncomment this to allow local users to log in.

local_enable=YES

3. Start VSFTPD.

[root@bigboy tmp]# service vsftpd start

4. Create a user group and shared directory. In this case, use /home/ftp-docs and a user group name of ftp-users for the remote users.

[root@bigboy tmp]# groupadd ftp-users

[root@bigboy tmp]# mkdir /home/ftp-docs

5. Make the directory accessible to the ftp-users group.

[root@bigboy tmp]# chmod 750 /home/ftp-docs

[root@bigboy tmp]# chown root:ftp-users /home/ftp-docs

6. Add users, and make their default directory /home/ftp-docs

[root@bigboy tmp]# useradd -g ftp-users -d /home/ftp-docs user1

[root@bigboy tmp]# useradd -g ftp-users -d /home/ftp-docs user2

[root@bigboy tmp]# useradd -g ftp-users -d /home/ftp-docs user3

[root@bigboy tmp]# useradd -g ftp-users -d /home/ftp-docs user4

[root@bigboy tmp]# passwd user1

[root@bigboy tmp]# passwd user2

[root@bigboy tmp]# passwd user3

[root@bigboy tmp]# passwd user4

7. Copy the files to be downloaded by your users into the /home/ftp-docs directory.

8. Change the permissions of the files in the /home/ftp-docs directory for read-only access by the group.

[root@bigboy tmp]# chown root:ftp-users /home/ftp-docs/*

[root@bigboy tmp]# chmod 740 /home/ftp-docs/*

Users should now be able to log in to the server via FTP using their new usernames and passwords. If you absolutely don't want any FTP users to be able to write to any directory, set the write_enable line in your vsftpd.conf file to NO:

write_enable=NO

Remember, you must restart VSFTPD for the configuration file changes to take effect.

9. Connect to bigboy via FTP from another machine (smallfry in this example). Here 192.168.1.100 is the IP address of bigboy:

[root@smallfry tmp]# ftp 192.168.1.100

You will get a prompt like this:

ftp>
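If the octal modes used in steps 5 and 8 are unfamiliar, Python's standard stat.filemode() function renders them the way ls -l does. This is only a reading aid; describe_mode is a hypothetical helper name.

```python
import stat


def describe_mode(octal_mode: str, is_dir: bool = False) -> str:
    """Render an octal permission string such as "750" in ls -l style."""
    file_type = stat.S_IFDIR if is_dir else stat.S_IFREG
    return stat.filemode(file_type | int(octal_mode, 8))


if __name__ == "__main__":
    print(describe_mode("750", is_dir=True))  # /home/ftp-docs itself
    print(describe_mode("740"))               # the files inside it
```

The directory comes out as drwxr-x---, so members of ftp-users can enter and list it but not create files in it, and the files come out as -rwxr-----, so the group can read them but not modify them.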

FTP commands and files (these belong to the WU-FTPD server rather than VSFTPD):

/etc/ftpaccess : General configuration file: classes of users, access definitions, logging, etc.

Example:

class all real,guest,anonymous *

limit all 10 Any /etc/msgs/msg.dead

readme README* login

readme README* cwd=*

message /welcome.msg login

message .message cwd=*

compress yes all

tar yes all

log commands real

log transfers anonymous,real inbound,outbound

shutdown /etc/shutmsg

email user@hostname

/etc/ftphosts : Individual user host access to allow / deny a given username from an address.

# Example host access file

# Everything after a '#' is treated as comment,

# empty lines are ignored

allow bartm somehost.domain

deny fred otherhost.domain 131.211.32.*

/etc/ftpgroups : Lets you set up groups of users.

/etc/ftpusers : Users who are not allowed to log in.

/etc/ftpconversions : Allows users to request specific on-the-fly conversions.

-

chroot – Run with a special root directory

-

ftpcount – Show number of concurrent users.

-

ftpshut – Close down the FTP servers at a given time

-

ftprestart – Restart previously shut down FTP servers

-

ftpwho – Show current process information for each FTP user

Configuring Linux Mail Server

Email is an important part of any Web site you create. In a home environment, a free web based email service may be sufficient, but if you are running a business, then a dedicated mail server will probably be required.

This chapter will show you how to use sendmail to create a mail server that relays your outgoing mail to a remote user's mailbox and delivers incoming mail to a local mailbox. You'll also learn how to retrieve and send mail via your mail server using a mail client such as Outlook Express or Evolution.

Configuring Sendmail

One of the tasks in setting up DNS for your domain (my-site.com) is to use the MX record in the configuration zone file to state the hostname of the server that will handle the mail for the domain. The most popular Unix mail transport agent is sendmail, but others, such as postfix and qmail, are also gaining popularity with Linux. The steps used to convert a Linux box into a sendmail mail server will be explained here.

How Sendmail Works

As stated before, sendmail can handle both incoming and outgoing mail for your domain. Take a closer look.

Usually each user in your home has a regular Linux account on your mail server. Mail sent to each of these users (username@my-site.com) eventually arrives at your mail server and sendmail then processes it and deposits it in the mailbox file of the user’s Linux account.

Mail isn’t actually sent directly to the user’s PC. Users retrieve their mail from the mail server using client software, such as Microsoft’s Outlook or Outlook Express that supports either the POP or IMAP mail retrieval protocols.

Linux users logged into the mail server can read their mail directly using a text-based client, such as mail, or a GUI client, such as Evolution. Linux workstation users can use the same programs to access their mail remotely.

The process is different when sending mail via the mail server. PC and Linux workstation users configure their e-mail software to make the mail server their outbound SMTP mail server.

If the mail is destined for a local user in the my-site.com domain, then sendmail places the message in that person's mailbox so that they can retrieve it using one of the methods above.

If the mail is being sent to another domain, sendmail first uses DNS to get the MX record for the other domain. It then attempts to relay the mail to the appropriate destination mail server using the Simple Mail Transfer Protocol (SMTP). One of the main advantages of mail relaying is that when a PC user A sends mail to user B on the Internet, the PC of user A can delegate the SMTP processing to the mail server.

Note: If mail relaying is not configured properly, then your mail server could be commandeered to relay spam. Simple sendmail security will be covered later.
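The delivery decision described above can be sketched in a few lines of Python. This is a simplified model, not sendmail's own logic: route_recipient is a hypothetical name, and a plain dictionary stands in for the DNS MX lookup. Lower MX preference values are tried first, as SMTP specifies.

```python
def route_recipient(address, local_domains, mx_records):
    """Return ("local", user) or ("relay", [mail hosts in the order to try]).

    mx_records is a stand-in for DNS: {domain: [(preference, host), ...]}.
    """
    user, _, domain = address.rpartition("@")
    domain = domain.lower()
    if domain in local_domains:
        return ("local", user)  # deposit in the user's mailbox on this server
    records = mx_records.get(domain)
    if not records:
        # A real MTA would fall back to the domain's address (A) record here.
        raise LookupError(f"no MX record for {domain}")
    return ("relay", [host for _, host in sorted(records)])


if __name__ == "__main__":
    dns = {"example.org": [(20, "mx2.example.org"), (10, "mx1.example.org")]}
    print(route_recipient("paul@my-site.com", {"my-site.com"}, dns))
    print(route_recipient("bob@example.org", {"my-site.com"}, dns))
```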

Sendmail Macros

When mail passes through a sendmail server the mail routing information in its header is analyzed, and sometimes modified, according to the desires of the systems administrator. Using a series of highly complicated regular expressions listed in the /etc/mail/sendmail.cf file, sendmail inspects this header and then acts accordingly.

In recognition of the complexity of the /etc/mail/sendmail.cf file, a much simpler file named /etc/mail/sendmail.mc was created, containing more understandable instructions for systems administrators. These are then interpreted by a number of macro routines to create the sendmail.cf file. After editing sendmail.mc, you must always run the macros and restart sendmail for the changes to take effect.

Each sendmail.mc directive starts with a keyword, such as DOMAIN, FEATURE, or OSTYPE, followed by a subdirective and in some cases arguments. A typical example is:

FEATURE(`virtusertable',`hash -o /etc/mail/virtusertable.db')dnl

The keyword usually names a subdirectory of the /usr/share/sendmail-cf directory in which the macro can be found, and the subdirective is usually the name of the macro file itself. So in the example, the macro file is /usr/share/sendmail-cf/feature/virtusertable.m4, and the argument `hash -o /etc/mail/virtusertable.db' is passed to it.

Notice that sendmail is sensitive to the quotation marks used in the m4 macro directives. They open with a grave accent (`) and end with a single quote ('):

FEATURE(`masquerade_envelope')dnl
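Text copied from web pages or word processors can silently turn these quote characters into typographic ones, so a quick check can help. The following Python sketch is a hypothetical helper, not part of sendmail; it flags typographic quotes and unbalanced ` ... ' pairs on a single directive line.

```python
TYPOGRAPHIC = ("\u2018", "\u2019", "\u2032")  # left/right single quote, prime


def m4_quote_problems(line: str) -> list:
    """List quoting problems in one sendmail.mc directive line."""
    problems = [f"typographic quote {ch!r}: use ` to open and ' to close"
                for ch in TYPOGRAPHIC if ch in line]
    depth = 0
    for ch in line:
        if ch == "`":
            depth += 1
        elif ch == "'":
            depth -= 1
            if depth < 0:
                problems.append("closing ' without an opening `")
                depth = 0
    if depth > 0:
        problems.append("opening ` is never closed")
    return problems


if __name__ == "__main__":
    print(m4_quote_problems("FEATURE(`virtusertable',`hash -o /etc/mail/virtusertable.db')dnl"))
    print(m4_quote_problems("FEATURE(`masquerade_envelope\u2018)dnl"))
```

It is meant for macro lines only; an apostrophe inside a dnl comment would be reported as a false positive.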

Some keywords, such as define for the definition of certain sendmail variables and MASQUERADE_DOMAIN, have no corresponding directories with matching macro files. The macros in the /usr/share/sendmail-cf/m4 directory deal with these.

Once you finish editing the sendmail.mc file, you can then execute the make command while in the /etc/mail directory to regenerate the new sendmail.cf file.

[root@bigboy tmp]# cd /etc/mail

[root@bigboy mail]# make

If there have been no changes to the files in /etc/mail since the last time make was run, make reports that there is nothing to do:

[root@bigboy mail]# make

make: Nothing to be done for `all'.

[root@bigboy mail]#

The make command actually generates the sendmail.cf file using the m4 command. Using m4 is simple: you specify the name of the macro file as the argument, in this case sendmail.mc, and redirect the output, which would normally go to the screen, to the sendmail.cf file with the ">" redirection symbol.

# m4 /etc/mail/sendmail.mc > /etc/mail/sendmail.cf

I’ll discuss many of the features of the sendmail.mc file later in the chapter.

Most RedHat and Fedora Linux software products are available in the RPM format. You will need to make sure that the sendmail, sendmail-cf, and m4 software RPMs are installed. (Chapter 6, "Installing RPM Software" will tell you how.) When searching for the RPMs, remember that the filename usually starts with the software package name followed by a version number, as in sendmail-8.12.10-1.1.1.i386.rpm.

You can use the chkconfig command to get sendmail configured to start at boot:

[root@bigboy tmp]# chkconfig sendmail on

To start, stop, and restart sendmail after booting, use:

[root@bigboy tmp]# service sendmail start

[root@bigboy tmp]# service sendmail stop

[root@bigboy tmp]# service sendmail restart

Remember to restart the sendmail process every time you make a change to the configuration files for the changes to take effect on the running process. You can also test whether the sendmail process is running with the pgrep command:

[root@bigboy tmp]# pgrep sendmail

If sendmail is running, you should get a response of plain old process ID numbers.

How to Restart Sendmail after Editing Your Configuration Files

In this chapter, you'll see that sendmail uses a variety of configuration files that require different treatments for their commands to take effect. This little script encapsulates all the required post-configuration steps.

#!/bin/bash

cd /etc/mail

make

newaliases

/etc/init.d/sendmail restart

It first runs the make command, which creates a new sendmail.cf file from the sendmail.mc file and compiles supporting configuration files in the /etc/mail directory according to the instructions in the file /etc/mail/Makefile. It then generates new e-mail aliases with the newaliases command, (this will be covered later), and then restarts sendmail.

Use this command to make the script executable.

chmod 700 filename

You’ll need to run the script each time you change any of the sendmail configuration files described in the sections to follow.

The line in the script that restarts sendmail is only needed if you have made changes to the /etc/mail/sendmail.mc file, but I included it so that you don't forget. This may not be a good idea in a production system. If your version of Linux doesn't generate sendmail.cf with make, replace the make line with the m4 command shown earlier, directing its output to the sendmail.cf location used by your version.

Note: When sendmail starts, it reads the file sendmail.cf for its configuration. sendmail.mc is a more user friendly configuration file and really is much easier to fool around with without getting burned. The sendmail.cf file is located in different directories depending on the version of RedHat you use. The /etc/sendmail.cf file is used for versions up to 7.3, and /etc/mail/sendmail.cf is used for versions 8.0 and higher and Fedora Core.

The /etc/mail/sendmail.mc File

You can define most of sendmail’s configuration parameters in the /etc/mail/sendmail.mc file, which is then used by the m4 macros to create the /etc/mail/sendmail.cf file. Configuration of the sendmail.mc file is much simpler than configuration of sendmail.cf, but it is still often viewed as an intimidating task with its series of structured directive statements that get the job done. Fortunately, in most cases you won’t have to edit this file very often.

How to Put Comments in sendmail.mc

In most Linux configuration files, a # symbol at the beginning of a line converts it into a comment line, deactivating any commands that may reside on that line.

The sendmail.mc file doesn’t use this character for commenting, but instead uses the string "dnl". Here are some valid examples of comments used with the sendmail.mc configuration file:

These statements are disabled by dnl commenting.

dnl DAEMON_OPTIONS(`Port=smtp,Addr=127.0.0.1, Name=MTA')

dnl # DAEMON_OPTIONS(`Port=smtp,Addr=127.0.0.1, Name=MTA')

This statement is incorrectly disabled:

# DAEMON_OPTIONS(`Port=smtp,Addr=127.0.0.1, Name=MTA')

This statement is active:

DAEMON_OPTIONS(`Port=smtp,Addr=127.0.0.1, Name=MTA')
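The rule above is easy to express in code. This Python sketch (classify_mc_line is a hypothetical name) labels each sendmail.mc line the way the examples do: lines starting with the dnl keyword are disabled, lines starting with # are flagged, and everything else is treated as active.

```python
import re


def classify_mc_line(line: str) -> str:
    """Classify a sendmail.mc line as blank, disabled, incorrectly disabled or active."""
    stripped = line.strip()
    if not stripped:
        return "blank"
    if re.match(r"dnl\b", stripped):
        return "disabled"  # m4 discards everything from dnl to the end of the line
    if stripped.startswith("#"):
        return "incorrectly disabled"
    return "active"


if __name__ == "__main__":
    for text in ("dnl DAEMON_OPTIONS(`Port=smtp,Addr=127.0.0.1, Name=MTA')",
                 "# DAEMON_OPTIONS(`Port=smtp,Addr=127.0.0.1, Name=MTA')"):
        print(classify_mc_line(text), "|", text)
```

Running it over every line of the file and printing the ones labeled "incorrectly disabled" is a quick way to catch # comments carried over from other configuration files.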

Remember that you will never receive mail unless you have configured DNS for your domain to make your new Linux box mail server the target of the DNS domain’s MX record.

Configure Your Mail Server’s Name In DNS

You first need to make sure that your mail server's name resolves in DNS correctly. For example, if your mail server's name is bigboy and you intend for it to handle mail mostly for the domain my-site.com, then bigboy.my-site.com must correctly resolve to the IP address of one of the mail server's interfaces. You can test this using the host command:

[root@smallfry tmp]# host bigboy.my-site.com

bigboy.my-site.com has address 172.16.1.100

[root@smallfry tmp]#

You will need to fix your DNS server’s entries if the resolution isn’t correct.

Configure The /etc/resolv.conf File

The sendmail program expects DNS to be configured correctly on the DNS server. The MX record for your domain must point to the IP address of the mail server.

The program also expects the files used by the mail server’s DNS client to be configured correctly. The first one is the /etc/resolv.conf file in which there must be a domain directive that matches one of the domains the mail server is expected to handle mail for.

Finally, sendmail expects a nameserver directive that points to the IP address of the DNS server the mail server should use to get its DNS information.

For example, if the mail server is handling mail for my-site.com and the IP address of the DNS server is 192.168.1.100, there must be directives that look like this:

domain my-site.com

nameserver 192.168.1.100

An incorrectly configured resolv.conf file can lead to errors like this one when running the m4 command to process the information in your sendmail.mc file:

WARNING: local host name (smallfry) is not qualified; fix $j in config file
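The two resolv.conf requirements can be checked with a short Python sketch. It is illustrative only: check_resolv_conf is a hypothetical name, and it reads only the domain and nameserver keywords, treating lines that begin with # or ; as comments.

```python
def check_resolv_conf(text: str, mail_domains: set) -> list:
    """Return a list of problems; an empty list means both requirements are met."""
    domains, nameservers = [], []
    for line in text.splitlines():
        fields = line.split()
        if not fields or line.startswith(("#", ";")):
            continue  # blank line or comment
        if fields[0] == "domain" and len(fields) > 1:
            domains.append(fields[1])
        elif fields[0] == "nameserver" and len(fields) > 1:
            nameservers.append(fields[1])
    problems = []
    if not any(d in mail_domains for d in domains):
        problems.append("no domain directive for a domain this server handles mail for")
    if not nameservers:
        problems.append("no nameserver directive")
    return problems


if __name__ == "__main__":
    print(check_resolv_conf("domain my-site.com\nnameserver 192.168.1.100\n", {"my-site.com"}))
```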

The /etc/hosts file is also used by DNS clients and needs to be configured correctly as well. Here is a brief example of the first line you should expect to see in it:

127.0.0.1 bigboy.my-site.com localhost.localdomain localhost bigboy

The entry for 127.0.0.1 must always be followed by the fully qualified domain name (FQDN) of the server. In the case above it would be bigboy.my-site.com. Then you must have an entry for localhost and localhost.localdomain. Linux does not function properly if the 127.0.0.1 entry in /etc/hosts doesn’t also include localhost and localhost.localdomain. Finally you can add any other aliases your host may have to the end of the line.
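The rules for the 127.0.0.1 line can be checked the same way. In this Python sketch (check_loopback_entry is a hypothetical name), treating the first name as an FQDN only if it contains a dot and is not a localhost name is a simplifying assumption.

```python
def check_loopback_entry(hosts_text: str) -> list:
    """Check the 127.0.0.1 line of an /etc/hosts file against the rules above."""
    names = None
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, then split on whitespace
        if fields and fields[0] == "127.0.0.1":
            names = fields[1:]
            break
    if names is None:
        return ["no 127.0.0.1 entry"]
    problems = []
    if not names or "." not in names[0] or names[0].startswith("localhost"):
        problems.append("the first name should be the server's FQDN")
    for required in ("localhost", "localhost.localdomain"):
        if required not in names:
            problems.append(f"missing {required}")
    return problems


if __name__ == "__main__":
    print(check_loopback_entry("127.0.0.1 bigboy.my-site.com localhost.localdomain localhost bigboy"))
```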

How To Configure Linux Sendmail Clients

All Linux mail clients in your home or company need to know which server is the mail server. This is configured in the sendmail.mc file by setting the SMART_HOST statement to include the mail server. In the example below, the mail server has been set to mail.my-site.com, the mail server for the my-site.com domain.

define(`SMART_HOST',`mail.my-site.com')

If you don’t have a mail server on your network, you can either create one, or use the one offered by your ISP.

Once this is done, you need to process the sendmail.mc file and restart sendmail. To do this, run the restart script from earlier in the chapter.

If the sendmail server is a Linux server, then its /etc/hosts file will also have to be configured correctly.

Converting From a Mail Client to a Mail Server

All Linux systems have a virtual loopback interface that lives only in memory with an IP address of 127.0.0.1. As mail must be sent to a target IP address even when there is no NIC in the box, sendmail therefore uses the loopback address to send mail between users on the same Linux server. To become a mail server, and not a mail client, sendmail needs to be configured to listen for messages on NIC interfaces as well.

1. Determine which NICs sendmail is running on. You can see the interfaces on which sendmail is listening with the netstat command. Because sendmail listens on TCP port 25, you use netstat and grep for 25 to see a default configuration listening only on IP address 127.0.0.1 (loopback):

[root@bigboy tmp]# netstat -an | grep :25 | grep tcp

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN

[root@bigboy tmp]#

2. Edit sendmail.mc to make sendmail listen on all interfaces. If sendmail is listening only on the loopback interface, comment out the DAEMON_OPTIONS line in the /etc/mail/sendmail.mc file with a dnl statement. It is also good practice to take precautions against spam by not accepting mail from domains that don't exist, which you do by commenting out the accept_unresolvable_domains feature too. See the fourth and next-to-last lines in the example.

dnl This changes sendmail to only listen on the loopback device 127.0.0.1

dnl and not on any other network devices. Comment this out if you want

dnl to accept email over the network.

dnl DAEMON_OPTIONS(`Port=smtp,Addr=127.0.0.1, Name=MTA')

dnl NOTE: binding both IPv4 and IPv6 daemon to the same port requires

dnl a kernel patch

dnl DAEMON_OPTIONS(`port=smtp,Addr=::1, Name=MTA-v6, Family=inet6')

dnl We strongly recommend to comment this one out if you want to protect

dnl yourself from spam. However, the laptop and users on computers that do

dnl not have 24×7 DNS do need this.

dnl FEATURE(`accept_unresolvable_domains')dnl

dnl FEATURE(`relay_based_on_MX')dnl

Note: You need to be careful with the accept_unresolvable_domains feature. In the sample network, bigboy, the mail server, does not accept e-mail relayed from any of the other PCs on your network if they are not in DNS.

Note: If your server has multiple NICs and you want it to listen on only one of them, then you can uncomment the localhost DAEMON_OPTIONS entry and add another one for the IP address of the NIC on which you wish to accept SMTP traffic.

3. Comment out the SMART_HOST entry in sendmail.mc. The mail server doesn't need a SMART_HOST entry in its sendmail.mc file. Comment this out with a dnl at the beginning.

dnl define(`SMART_HOST',`mail.my-site.com')

4. Regenerate the sendmail.cf file, and restart sendmail. Again, you can do this with the restart script from the beginning of the chapter.

5. Make sure sendmail is listening on all interfaces (0.0.0.0).

[root@bigboy tmp]# netstat -an | grep :25 | grep tcp

tcp 0 0 0.0.0.0:25 0.0.0.0:* LISTEN

You have now completed the first phase of converting your Linux server into a sendmail server by enabling it to listen to SMTP traffic on its interfaces. The following sections will show you how to define what type of mail it should handle and the various ways this mail can be processed.
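To turn the netstat checks in steps 1 and 5 into a single answer, here is a minimal Python sketch. smtp_listen_scope is a hypothetical name, and like the grep commands it assumes Linux netstat's column layout.

```python
def smtp_listen_scope(netstat_output: str, port: int = 25) -> str:
    """Report where a TCP service is listening, based on netstat -an output."""
    addresses = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        if len(fields) < 6 or not fields[0].startswith("tcp") or fields[5] != "LISTEN":
            continue
        host, _, listen_port = fields[3].rpartition(":")
        if listen_port == str(port):
            addresses.add(host)
    if not addresses:
        return "not listening"
    if addresses & {"0.0.0.0", "::"}:
        return "all interfaces"
    if addresses <= {"127.0.0.1", "::1"}:
        return "loopback only"
    return "specific interfaces: " + ", ".join(sorted(addresses))


if __name__ == "__main__":
    print(smtp_listen_scope("tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN"))
    print(smtp_listen_scope("tcp 0 0 0.0.0.0:25 0.0.0.0:* LISTEN"))
```

A result of "loopback only" corresponds to the default client configuration in step 1, and "all interfaces" to the finished mail server in step 5.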

A General Guide To Using The sendmail.mc File

The sendmail.mc file can seem jumbled. To make it less cluttered I usually create two easily identifiable sections in it with all the custom commands I’ve ever added.

The first section is near the top where the FEATURE statements usually are, and the second section is at the very bottom.

Sometimes sendmail will archive this file when you do a version upgrade. Having easily identifiable modifications in the file will make post upgrade reconfiguration much easier. Here is a sample:

dnl ***** Customised section 1 start *****

dnl

FEATURE(delay_checks)dnl

FEATURE(masquerade_envelope)dnl

FEATURE(allmasquerade)dnl

FEATURE(masquerade_entire_domain)dnl

dnl

dnl ***** Customised section 1 end *****

The /etc/mail/relay-domains File

The /etc/mail/relay-domains file lists the domains from which sendmail will relay mail. Its contents should be limited to domains that can be trusted not to originate spam. By default, this file does not exist in a standard RedHat / Fedora install. With the following entry, all mail sent from my-super-duper-site.com and not destined for this mail server will be forwarded:

my-super-duper-site.com

One disadvantage of this file is that it controls mail based on the source domain only, and source domains can be spoofed by spam e-mail servers. The /etc/mail/access file has more capabilities, such as restricting relaying by IP address or network range, and is more commonly used. If you delete /etc/mail/relay-domains, then relay access is determined entirely by the /etc/mail/access file.

Be sure to run the restart sendmail script from the beginning of the chapter for these changes to take effect.

You can make sure that only trusted PCs on your network have the ability to relay mail via your mail server by using the /etc/mail/access file. That is to say, the mail server will relay mail only for those PCs on your network that have their e-mail clients configured to use the mail server as their outgoing SMTP mail server. (In Outlook Express, you set this using: Tools>Accounts>Properties>Servers)

If you don’t take the precaution of using this feature, you may find your server being used to relay mail for spam e-mail sites. Configuring the /etc/mail/access file will not stop spam coming to you, only spam flowing through you.

The /etc/mail/access file has two columns. The first lists the IP addresses and domains from which the mail is coming or to which it is going. The second lists the action to be taken when mail from these sources or to these destinations is received. Keywords include RELAY, REJECT, OK (not ACCEPT), and DISCARD. There is no third column to state whether the IP address or domain is the source or destination of the mail; sendmail assumes it could be either and tries to match both. Sendmail rejects any other attempt to relay mail that doesn't match an entry in the /etc/mail/access file. Despite this, my experience has been that control on a per-e-mail-address basis is much more intuitive via the /etc/mail/virtusertable file.

The sample file that follows allows relaying for only the server itself (127.0.0.1, localhost), two client PCs on your home 192.168.1.X network, everyone on your 192.168.2.X network, and everyone passing e-mail through the mail server from servers belonging to my-site.com. Remember that a server will be considered a part of my-site.com only if its IP address can be found in a DNS reverse zone file:

localhost.localdomain RELAY

localhost RELAY

127.0.0.1 RELAY

192.168.1.16 RELAY

192.168.1.17 RELAY

192.168.2 RELAY

my-site.com RELAY

You’ll then have to convert this text file into a sendmail readable database file named /etc/mail/access.db. Here are the commands you need:

[root@bigboy tmp]# cd /etc/mail

[root@bigboy mail]# make

The sendmail restart script we configured at the beginning of the chapter does this for you too.

Remember that the relay security features of this file may not work if you don’t have a correctly configured /etc/hosts file.

The /etc/mail/local-host-names File

When sendmail receives mail, it needs a way of determining whether it is responsible for the mail it receives. It uses the /etc/mail/local-host-names file to do this. This file has a list of hostnames and domains for which sendmail accepts responsibility. For example, if this mail server were to accept mail for the domains my-site.com and another-site.com, the file would look like this:

my-site.com
another-site.com

In this case, remember to modify the MX record in the another-site.com DNS zone file so that it points to your mail server, mail.my-site.com. Here is an example (remember that each trailing "." is important):

another-site.com.  MX  10  mail.my-site.com.  ; Primary Mail Exchanger for another-site.com
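The "am I responsible for this mail?" test that local-host-names drives can be sketched in a few lines of Python. This is a simplified model under stated assumptions: names compare case-insensitively, an address with no @ is treated as local, and each entry covers only that exact name, so a subdomain such as sales.my-site.com is not included unless listed. Real sendmail also treats the server's own hostnames as local, which this sketch ignores; the function names are hypothetical.

```python
from typing import Set

# Contents of the sample /etc/mail/local-host-names file.
SAMPLE_LOCAL_HOST_NAMES = """
my-site.com
another-site.com
"""


def load_local_names(text: str) -> Set[str]:
    """One name per line, compared case-insensitively; blank lines and # comments ignored."""
    names = set()
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            names.add(line.lower().rstrip("."))
    return names


def is_local_recipient(address: str, local_names: Set[str]) -> bool:
    """True if this server should accept responsibility for the recipient."""
    if "@" not in address:
        return True  # assumption: unqualified addresses are local
    domain = address.rsplit("@", 1)[1].lower().rstrip(".")
    return domain in local_names  # exact match only; subdomains are not covered


names = load_local_names(SAMPLE_LOCAL_HOST_NAMES)
print(is_local_recipient("paul@My-Site.com", names))        # True
print(is_local_recipient("paul@sales.my-site.com", names))  # False
print(is_local_recipient("bob@example.org", names))         # False
```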

Which User Should Really Receive The Mail?

After checking the contents of the virtusertable file, sendmail checks the aliases file to determine the ultimate recipient of mail.

The /etc/mail/virtusertable file

The /etc/mail/virtusertable file contains a set of simple instructions on what to do with received mail. The first column lists the target email address, and the second column lists the local user's mailbox, a remote email address, or a mailing list entry in the /etc/aliases file to which the email should be forwarded.

If there is no match in the virtusertable file, sendmail checks for the full email address in the /etc/aliases file.

webmaster@another-site.com webmasters
@another-site.com marc
sales@my-site.com sales@another-site.com
paul@my-site.com paul
finance@my-site.com paul
@my-site.com error:nouser User unknown

In this example, mail sent to:

> webmaster@another-site.com will go to local user (or mailing list) webmasters; all other mail to another-site.com will go to local user marc.

> sales at my-site.com will go to the sales department at another-site.com.

> paul and finance at my-site.com go to local user (or mailing list) paul.

All other users at my-site.com receive a bounce message stating "User unknown".
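The lookup just described can be modeled in Python as a rough sketch: try the full address first, then the @domain catch-all, and treat "no match" as "fall through to /etc/aliases". It is a simplification; real sendmail also handles user+detail forms and %1-style substitutions, which are left out here, and the function names and result labels ("local", "forward", "bounce") are hypothetical.

```python
from typing import Dict, Optional, Tuple

# The sample /etc/mail/virtusertable shown above.
SAMPLE_VIRTUSERTABLE = """
webmaster@another-site.com webmasters
@another-site.com marc
sales@my-site.com sales@another-site.com
paul@my-site.com paul
finance@my-site.com paul
@my-site.com error:nouser User unknown
"""


def parse_virtusertable(text: str) -> Dict[str, str]:
    """Key is the first column; the value is the rest of the line (it may contain spaces)."""
    table: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, value = line.split(None, 1)
        table[key.lower()] = value.strip()  # keys folded to lower case, as makemap does by default
    return table


def virtuser_lookup(table: Dict[str, str], address: str) -> Optional[Tuple[str, str]]:
    """Full address first, then the @domain catch-all; None means 'go on to /etc/aliases'."""
    address = address.lower()
    domain = address.rpartition("@")[2]
    for key in (address, "@" + domain):
        if key in table:
            target = table[key]
            if target.startswith("error:"):
                parts = target.split(None, 1)  # 'error:nouser', 'User unknown'
                return ("bounce", parts[1] if len(parts) > 1 else "")
            if "@" in target:
                return ("forward", target)  # remote address
            return ("local", target)  # local user or /etc/aliases entry
    return None


vt = parse_virtusertable(SAMPLE_VIRTUSERTABLE)
print(virtuser_lookup(vt, "sales@my-site.com"))   # ('forward', 'sales@another-site.com')
print(virtuser_lookup(vt, "jane@another-site.com"))  # ('local', 'marc')
print(virtuser_lookup(vt, "nobody@my-site.com"))  # ('bounce', 'User unknown')
```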

After editing the /etc/mail/virtusertable file, you have to convert it into a sendmail-readable database file named /etc/mail/virtusertable.db with two commands:

[root@bigboy tmp]# cd /etc/mail
[root@bigboy mail]# make

If these commands look familiar, that's because they also appear in your all-purpose sendmail restart script.

The /etc/aliases File

You can think of the /etc/aliases file as a mailing list file. The first column has the mailing list name (sometimes called a virtual mailbox), and the second column has the members of the mailing list separated by commas.

To start, sendmail searches the first column of the file for a match. If there is no match, then sendmail assumes the recipient is a regular user on the local server and deposits the mail in their mailbox.

If it finds a match in the first column, sendmail notes the nickname entry in the second column. It then searches for that nickname in the first column again to see whether the recipient is itself another mailing list.

If sendmail doesn't find a further match, it assumes the recipient is a regular user on the local server and deposits the mail in their mailbox.

If the recipient is a mailing list, sendmail goes through the process all over again to determine whether any of its members is on yet another list. When it has finished, every resulting recipient gets a copy of the e-mail message.
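The recursive expansion described above can be sketched in Python as follows. This is an illustrative model, not sendmail's implementation: names with no entry in the first column are treated as final recipients, each recipient gets a single copy, and an alias that has already been expanded is skipped so that a loop cannot recurse forever. The dictionary borrows a few entries from the sample /etc/aliases file in this section; the function name is hypothetical.

```python
from typing import Dict, List, Optional, Set

# A few entries borrowed from the sample /etc/aliases file in this section.
ALIASES: Dict[str, List[str]] = {
    "mailer-daemon": ["postmaster"],
    "postmaster": ["root"],
    "bin": ["root"],
    "root": ["marc", "webmaster@my-site.com"],
}


def expand(recipient: str, aliases: Dict[str, List[str]],
           _seen: Optional[Set[str]] = None) -> List[str]:
    """Recursively expand a recipient into the final mailboxes/addresses that get a copy."""
    seen = set() if _seen is None else _seen
    key = recipient.lower()
    if key not in aliases:
        return [recipient]  # no match in the first column: a real mailbox or remote address
    if key in seen:
        return []  # already expanded once: skipping it prevents alias loops
    seen.add(key)
    result: List[str] = []
    for member in aliases[key]:
        for final in expand(member, aliases, seen):
            if final not in result:  # each recipient gets only one copy
                result.append(final)
    return result


print(expand("mailer-daemon", ALIASES))  # ['marc', 'webmaster@my-site.com']
print(expand("marc", ALIASES))           # ['marc']
```

Mail to mailer-daemon passes through postmaster and root before landing on the two real recipients, which is the chain of lookups the paragraphs above walk through.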

In the example that follows, you can see that mail sent to users bin, daemon, lp, shutdown, apache, named, and so on by system processes will all be sent to user (or mailing list) root. In this case, root is actually an alias for a mailing list consisting of user marc and webmaster@my-site.com.

Note: The default /etc/aliases file installed with Red Hat/Fedora has the last line of this sample commented out with a #. You may want to delete the comment character and change user marc to another user. Also, after editing this file, you'll have to convert it into a sendmail-readable database file named /etc/aliases.db. Here is the command to do that:

[root@bigboy tmp]# newaliases

# Basic system aliases -- these MUST be present.
mailer-daemon: postmaster
postmaster: root

# General redirections for pseudo accounts.
bin: root
daemon: root
…
abuse: root

# trap decode to catch security attacks
decode: root

# Person who should get root's mail
root: marc,webmaster@my-site.com

Notice that there are no spaces between the mailing list entries for root: You will get errors if you add spaces.

In this simple mailing list example, mail sent to root actually goes to user account marc and webmaster@my-site.com. Because aliases can be very useful, here are a few more list examples for your /etc/aliases file.

> Mail to "directors@my-site.com" goes to users "peter", "paul" and "mary".

# Directors of my SOHO company
directors: peter,paul,mary

> Mail sent to "family@my-site.com" goes to users "grandma", "brother" and "sister".

# My family
family: grandma,brother,sister

> Mail sent to admin-list gets sent to all the users listed in the file /home/mailings/admin-list.

# My mailing list file
admin-list: ":include:/home/mailings/admin-list"

The advantage of using mailing list files is that the admin-list file can be one that trusted users are allowed to edit; user root is needed only to update the aliases file itself. Despite this, there are some problems with mail reflectors. One is that bounce messages from failed attempts to broadcast go to all users. Another is that all subscriptions and unsubscriptions have to be done manually by the mailing list administrator. If either of these is a problem for you, consider using a mailing list manager, such as Majordomo.
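A rough Python sketch of how an :include: entry can be resolved shows why trusted users can maintain the list without touching /etc/aliases: the members live in a separate plain file that is read at delivery time. It assumes a simple layout for that file (addresses separated by commas or newlines, lines starting with # ignored); the function names are hypothetical, and a throwaway temporary file stands in for /home/mailings/admin-list.

```python
import os
import tempfile
from typing import List


def read_include(path: str) -> List[str]:
    """Read member addresses from an :include: file (comma- or newline-separated)."""
    members: List[str] = []
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if not line or line.startswith("#"):
                continue  # skip blank lines and comments
            members.extend(m.strip() for m in line.split(",") if m.strip())
    return members


def alias_members(value: str) -> List[str]:
    """Members for the right-hand side of an aliases line, following :include: if present."""
    value = value.strip().strip('"')
    if value.startswith(":include:"):
        return read_include(value[len(":include:"):])
    return [m.strip() for m in value.split(",") if m.strip()]


# Usage: a temporary file plays the role of /home/mailings/admin-list.
with tempfile.NamedTemporaryFile("w", suffix=".list", delete=False, encoding="utf-8") as tmp:
    tmp.write("# admin-list members\nalice\nbob,carol\n")
    list_path = tmp.name
try:
    print(alias_members('":include:' + list_path + '"'))  # ['alice', 'bob', 'carol']
finally:
    os.remove(list_path)
```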

One important note about the /etc/aliases file: by default, your system uses sendmail to mail system messages to local user root. When sendmail sends e-mail to a local user, the mail has no To: in the e-mail header. If you then use a mail client, such as Outlook Express or Evolution, with a spam filtering rule that rejects mail with no To: in the header, you may find yourself dumping legitimate mail.

To get around this, try making root an alias for an address with a fully qualified domain name; this forces sendmail to insert the correct fields in the header. For example:

# Person who should get root's mail
root: webmaster@my-site.com

Sendmail Masquerading Explained

If you want your mail to appear to come from user@my-site.com and not user@bigboy.my-site.com, then you have two choices:

o Configure your email client, such as Outlook Express, to set your email address to user@my-site.com. (I'll explain this in the "Configuring Your POP Mail Server" section.)

o Set up masquerading to modify the domain name of all traffic originating from and passing through your mail server.

In the DNS configuration, you made bigboy the mail server for the domain my-site.com. You now have to tell bigboy, in the sendmail configuration file sendmail.mc, that all outgoing mail originating on bigboy should appear to come from my-site.com; otherwise, based on our settings in the /etc/hosts file, mail will appear to come from mail.my-site.com. This isn't terrible, but you may not want your Web site to be remembered with the word "mail" in front of it. In other words, you may want your mail server to give all outgoing mail a consistent return address, no matter which server originated it.

You can solve this by editing your sendmail.mc configuration file and adding some masquerading commands and directives:

FEATURE(`always_add_domain')dnl
FEATURE(`masquerade_entire_domain')dnl
FEATURE(`masquerade_envelope')dnl
FEATURE(`allmasquerade')dnl
MASQUERADE_AS(`my-site.com')dnl
MASQUERADE_DOMAIN(`my-site.com.')dnl
MASQUERADE_DOMAIN(`localhost')dnl
MASQUERADE_DOMAIN(`localhost.localdomain')dnl

The result is that:

The MASQUERADE_AS directive makes all mail originating on bigboy appear to come from a server within the domain my-site.com by rewriting the email header.

The MASQUERADE_DOMAIN directive makes mail relayed via bigboy from all machines in the listed domains (my-site.com and localdomain in this example) appear to come from the MASQUERADE_AS domain of my-site.com. Using DNS, sendmail checks the domain name associated with the IP address of the relay client sending the mail to help it determine whether it should masquerade it.

FEATURE masquerade_entire_domain makes sendmail masquerade every server within a MASQUERADE_DOMAIN domain, such as *.my-site.com, as my-site.com. In other words, mail from sales.my-site.com would be masqueraded as my-site.com. If this feature weren't selected, only servers named exactly my-site.com would be masqueraded. Use it with caution, and only when you are sure you have the authority to masquerade the entire domain.

FEATURE allmasquerade makes sendmail rewrite both recipient addresses and sender addresses relative to the local machine. If you cc: yourself on an outgoing mail, the other recipient sees a cc: to an address he knows instead of one on localhost.localdomain.

Note: Use FEATURE allmasquerade with caution if your mail server handles email for many different domains and the mailboxes for the users in these domains reside on the mail server. The allmasquerade statement causes all mail destined for these mailboxes to appear to be destined for users in the domain defined in the MASQUERADE_AS statement. In other words, if MASQUERADE_AS is my-site.com and you use allmasquerade, then mail for peter@another-site.com enters the correct mailbox, but sendmail rewrites the To:, making the e-mail appear to have been sent to peter@my-site.com originally.

FEATURE always_add_domain always masquerades email addresses, even if the mail is sent from a user on the mail server to another user on the same mail server.

FEATURE masquerade_envelope rewrites the email envelope just as MASQUERADE_AS rewrote the header.
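As a rough illustration of how these settings interact for a sender address, here is a simplified Python model. It is not sendmail's rewriting logic: it only covers exposed users, bare user names, the server's own names, exact MASQUERADE_DOMAIN matches, and masquerade_entire_domain, and it ignores the header/envelope split and recipient rewriting. The function name is hypothetical, and the server's own names (bigboy.my-site.com, localhost, localhost.localdomain) are assumptions based on the earlier examples.

```python
from typing import Iterable, Set


def masquerade(address: str, masquerade_as: str, local_names: Set[str],
               masq_domains: Set[str], entire_domain: bool = False,
               exposed_users: Iterable[str] = ("root",)) -> str:
    """Simplified model of sender-address masquerading; not sendmail's real rulesets."""
    user, at, host = address.partition("@")
    if user.lower() in {u.lower() for u in exposed_users}:
        return address  # exposed user: never masqueraded
    if not at:
        return f"{user}@{masquerade_as}"  # bare user name: qualify it (cf. always_add_domain)
    host = host.lower().rstrip(".")
    own_names = {n.lower().rstrip(".") for n in local_names}
    domains = {d.lower().rstrip(".") for d in masq_domains}  # 'my-site.com.' -> 'my-site.com'
    if host in own_names or host in domains:
        return f"{user}@{masquerade_as}"  # the server itself or an exact MASQUERADE_DOMAIN
    if entire_domain and any(host.endswith("." + d) for d in domains):
        return f"{user}@{masquerade_as}"  # masquerade_entire_domain: any host inside the domain
    return address  # some other domain: left alone


LOCAL_NAMES = {"bigboy.my-site.com", "localhost", "localhost.localdomain"}  # assumed
MASQ_DOMAINS = {"my-site.com.", "localhost", "localhost.localdomain"}  # the MASQUERADE_DOMAIN lines

print(masquerade("marc@bigboy.my-site.com", "my-site.com", LOCAL_NAMES, MASQ_DOMAINS))
# marc@my-site.com
print(masquerade("sales@pc1.my-site.com", "my-site.com", LOCAL_NAMES, MASQ_DOMAINS,
                 entire_domain=True))
# sales@my-site.com
```

Calling the second example with entire_domain=False leaves the address unchanged, which is the difference masquerade_entire_domain makes.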

Masquerading is an important part of any mail server configuration as it enables systems administrators to use multiple outbound mail servers, each providing only the global domain name for a company and not the fully qualified domain name of the server itself. All email correspondence then has a uniform email address format that complies with the company’s brand marketing policies.

Note: E-mail clients, such as Outlook Express, treat the To: and From: lines as the e-mail header. When you choose Reply or Reply All in Outlook Express, the program automatically uses the To: and From: in the header. It is easy to fake the header, as spammers often do; faking the envelope, however, is detrimental to e-mail delivery.

The e-mail envelope contains the To: and From: used by mail servers for protocol negotiation. It is the envelope's From: that is used when e-mail rejection messages are sent between mail servers.
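The header/envelope split can be seen with Python's standard email and smtplib modules: the header addresses are part of the message text, while the envelope addresses are passed separately to the SMTP server (as MAIL FROM and RCPT TO). This is a sketch with hypothetical addresses; bounces@my-site.com in particular is an invented envelope sender, and send() needs a reachable SMTP server, so it is only defined here, not called.

```python
import smtplib
from email.message import EmailMessage
from typing import List


def build_message(header_from: str, header_to: str, subject: str, body: str) -> EmailMessage:
    """Header fields: what the recipient's mail client displays and replies to."""
    msg = EmailMessage()
    msg["From"] = header_from
    msg["To"] = header_to
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send(msg: EmailMessage, envelope_from: str, envelope_to: List[str],
         host: str = "localhost", port: int = 25) -> None:
    """Envelope: given to the server in MAIL FROM / RCPT TO, separate from the headers."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.send_message(msg, from_addr=envelope_from, to_addrs=envelope_to)


msg = build_message("webmaster@my-site.com", "peter@another-site.com",
                    "Masquerading test", "Check both the envelope and the header.")
print(msg["From"])  # webmaster@my-site.com
# send(msg, "bounces@my-site.com", ["peter@another-site.com"])  # requires a live SMTP server
```

A bounce for this message would go to the envelope sender, not to the From: header, which is why a faked envelope hurts delivery.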

The best way of testing masquerading from the Linux command line is to use the "mail -v username" command. I have noticed that "sendmail -v username" ignores masquerading altogether. You should also tail the /var/log/maillog file to verify that the masquerading is operating correctly and check the envelope and header of test email received by test email accounts.
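To speed up the maillog check, a small Python filter can list logged sender addresses that don't end in your domain. It assumes sendmail's usual from=<address> field, which records the envelope sender, so it complements rather than replaces inspecting the headers of received test mail. The sample log lines are invented for illustration; in practice you would feed it /var/log/maillog, and the function name is hypothetical.

```python
import re
from typing import Iterable, List

# Envelope sender as logged: from=<address>; from=<> is the null sender used by bounces.
FROM_RE = re.compile(r"\bfrom=<?([^>,\s]*)>?")


def unmasqueraded_senders(log_lines: Iterable[str], domain: str = "my-site.com") -> List[str]:
    """Return logged sender addresses that do not end in @domain."""
    suspects: List[str] = []
    for line in log_lines:
        match = FROM_RE.search(line)
        if not match or not match.group(1):
            continue  # not a from= line, or the null sender
        sender = match.group(1)
        if not sender.lower().endswith("@" + domain.lower()):
            suspects.append(sender)
    return suspects


# Invented sample lines; replace with open("/var/log/maillog") on a real server.
SAMPLE = [
    "Jan  6 10:00:01 bigboy sendmail[2101]: o06A01aa002101: from=<root@bigboy.my-site.com>, size=312, class=0, nrcpts=1, relay=root@localhost",
    "Jan  6 10:00:05 bigboy sendmail[2105]: o06A05bb002105: from=<marc@my-site.com>, size=290, class=0, nrcpts=1, relay=marc@localhost",
    "Jan  6 10:00:06 bigboy sendmail[2107]: o06A05bb002105: to=<peter@another-site.com>, delay=00:00:01, stat=Sent",
]
print(unmasqueraded_senders(SAMPLE))  # ['root@bigboy.my-site.com']
```

In this invented sample, only root's mail is flagged, which is what you would expect while root is exempt from masquerading.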

By default, user "root" will not be masqueraded. This is controlled by the following command in /etc/mail/sendmail.mc:

EXPOSED_USER(`root')dnl

To remove this restriction, comment the line out by placing "dnl" at the beginning of it, and then run the sendmail start script.

Using Sendmail to Change the Sender’s Email Address

Sometimes masquerading isn't enough. At times you may need to change not only the domain of the sender but also the username portion of the sender's e-mail address. For example, perhaps you bought a program for your SOHO office that sends out notifications to your staff, but the program inserts its own address as the sender's address, not that of the IT person.

Web-based CGI scripts tend to run as user apache and, therefore, send mail as user apache too. Often you won't want this, not only because apache's e-mail address may not be suitable, but also because some anti-spam programs check that the From:, or source e-mail address, actually exists as a real user. If your virtusertable file allows e-mail only to predefined users, then queries about the apache user will fail, and your valid e-mail may be classified as spam.

With sendmail, you can change both the domain and username on a case-by-case basis using the genericstable feature:

6. Add these statements to your /etc/mail/sendmail.mc file to activate the feature:

FEATURE(`genericstable',`hash -o /etc/mail/genericstable.db')dnl
GENERICS_DOMAIN_FILE(`/etc/mail/generics-domains')dnl